Have you seen the new Netflix documentary Coded Bias yet? If not, be sure to catch it.

In this documentary, filmmaker Shalini Kantayya follows MIT Media Lab computer scientist Joy Buolamwini, along with data scientists, mathematicians, and watchdog groups around the world, as they fight to expose the discrimination within facial recognition algorithms now prevalent across all spheres of daily life.

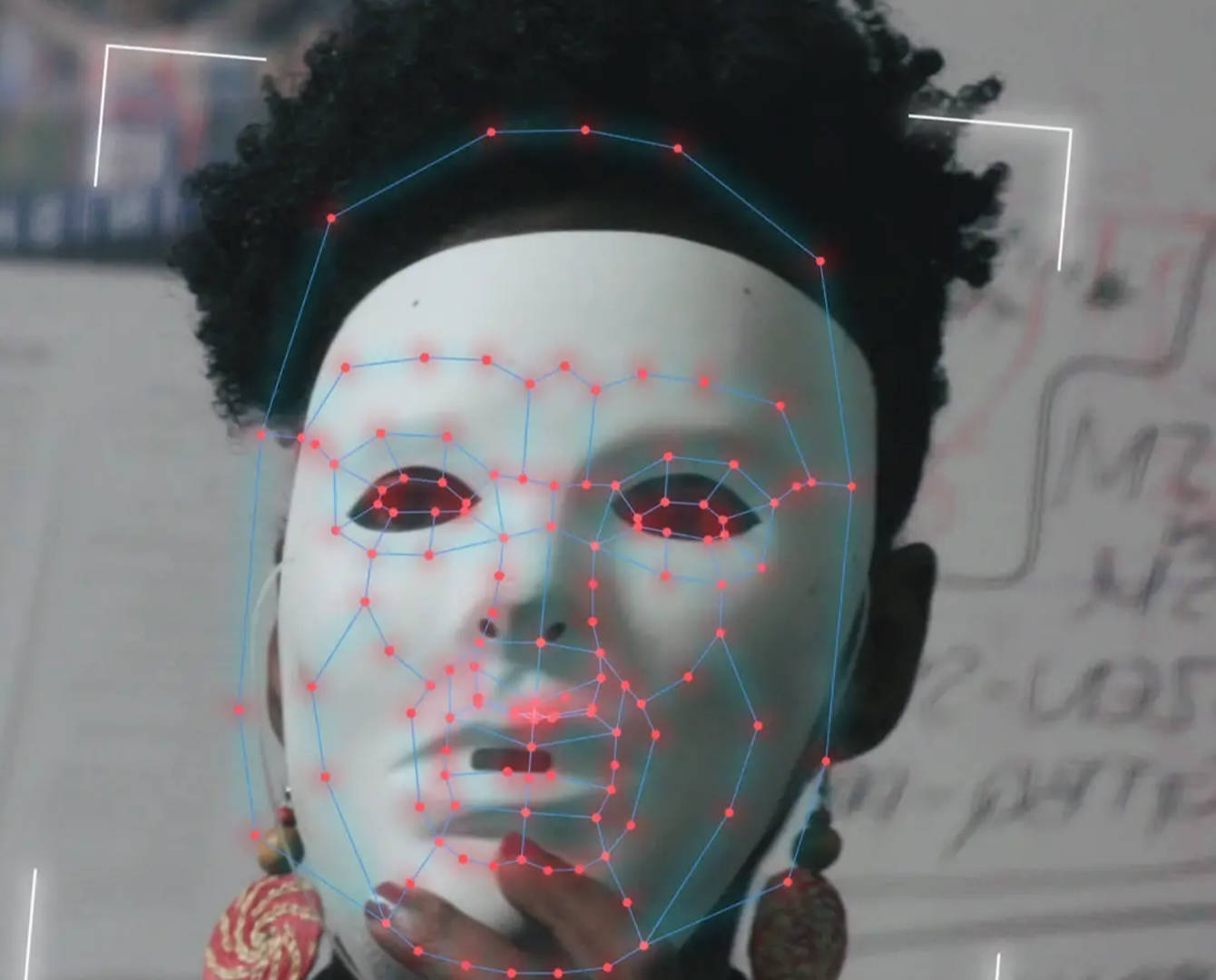

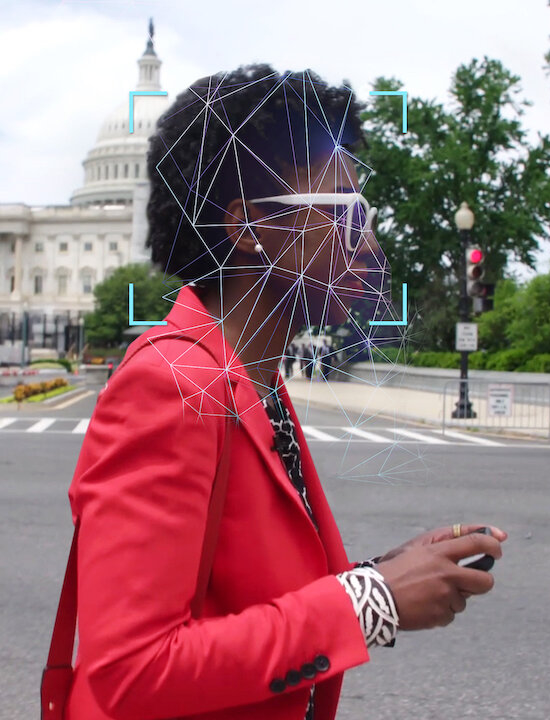

Modern society sits at the intersection of two crucial questions: What does it mean when artificial intelligence increasingly governs our liberties? And what are the consequences for the people A.I. is biased against? When MIT Media Lab researcher Joy Buolamwini discovers that many facial recognition technologies do not accurately detect darker-skinned faces or classify the faces of women, she delves into an investigation of widespread bias in algorithms. As it turns out, artificial intelligence is not neutral, and women are leading the charge to ensure our civil rights are protected.

Harvard Business Review says: “One of the biggest sources of anxiety about A.I. is not that it will turn against us, but that we simply cannot understand how it works. The solution to rogue systems that discriminate against women in credit applications or that make racist recommendations in criminal sentencing, or that reduce the number of black patients identified as needing extra medical care, might seem to be ‘explainable A.I.’ But sometimes, what’s just as important as knowing ‘why’ an algorithm made a decision, is being able to ask ‘what’ it was being optimized for in the first place?”

In many large companies, fundamentally important personnel decisions are made by artificial intelligence: who is invited to a job interview, and who is terminated? Coded Bias shows that artificial intelligence learns from past experience, so it tends to carry old prejudices forward and holds back progress toward a future free of them.

“When you think of A.I., it’s forward-looking, but A.I. is based on data, and data is a reflection of our history.”

Joy Buolamwini

The widespread use of A.I. will be a challenge for leaders. They will have to reveal the human decisions behind the design of their A.I. systems, the ethical and social concerns they took into account, the origins of their training data and the methods by which they procured it, and how well they monitored the results of those systems for traces of bias or discrimination. Businesses need models that they can trust, and achieving transparency with A.I. systems becomes more critical as adoption grows. The black box problem stands in the way: we often cannot fully understand why the algorithms behind A.I. work the way they do.