Shortened Version

We women are particularly familiar with prejudice; it accompanies us from the cradle to the casket. Prejudice is a major reason why women are still rare in leadership positions. Erasing it is a slow process, because pigeonholing and shallow categorization are an important part of our evolutionary history. Bias can be found even in our newest technologies. Algorithms, which increasingly influence and determine our lives, are a major barrier on the way to a prejudice-free(er) future.

WE ALL THINK IN CATEGORIES because automatically classifying people and situations has a distinct evolutionary benefit: it simplifies decision-making, saves mental capacity, and lets us respond swiftly to familiar scenarios. The biggest danger in categorizing people, however, is that it isn't always fair. After all, we have only recently learned to distinguish between structural and unconscious biases. Both are attitudes and beliefs that lie outside our conscious awareness.

The New Dimension of Pigeonhole Thinking: AI Bias

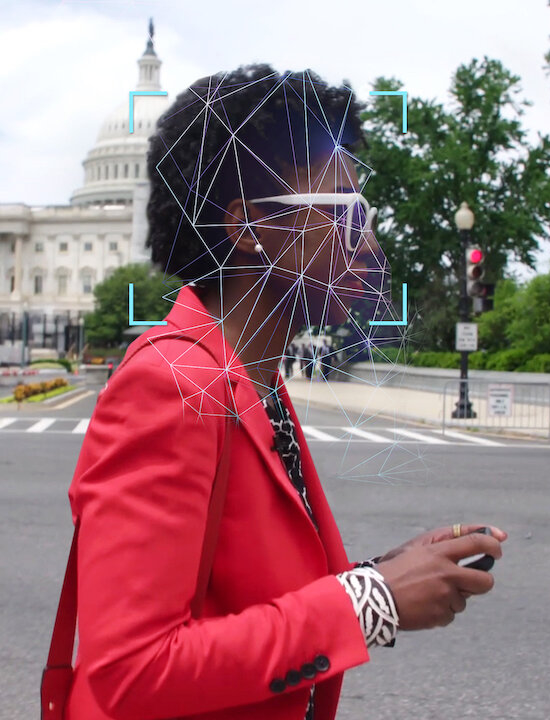

We live in a new digital age, an era of computers and artificial intelligence (AI). All social media platforms and most search engines and news websites are built on algorithms. AI also powers most of the image recognition systems used by large companies, such as Facebook's automatic image captioning, which recognizes whether a person, animal, or object is in an image. It not only identifies people in pictures but also recognizes whether a person is smiling, wearing accessories, standing or sitting, and how many people appear in a group picture. There is little doubt about the power of AI systems. The data analysis and pattern recognition enabled by deep learning have allowed AI, in some studies, to detect certain early-stage cancers more accurately than human doctors. AI can save lives and change lives, but it can also destroy them. And therein lies the problem. Above all, AI has one effect: it lets confirmation bias grow unchecked and shows us the world as we like it. Every day it confirms our beliefs, opinions, and preferences. We live in a perpetual echo chamber that is designed, coordinated, and constantly updated by AI. Worst of all, it's fun. That is terrifying.

Algorithmic bias is a completely new dimension of prejudice, and we will not be able to avoid dealing with it more closely. Artificial intelligence is shaping all our lives, and that raises pressing questions.

In many large companies, fundamentally important decisions, such as personnel decisions, are supported by artificial intelligence. For example, AI pre-selects who will be invited to interviews. The algorithms behind such decisions learn from past data, and that data can reflect flawed, unrepresentative, and prejudiced experience. This can hold back progress toward an unbiased future. To put it less abstractly: when an algorithm decides who should be shortlisted for an advertised executive position, it can preemptively eliminate people with immigrant backgrounds, people with disabilities, and women, because it relies on patterns in historical data. To such an algorithm, middle-aged white men look like the safe choice. AI systems cannot address ethical and social concerns unless they are programmed to do so. And that is exactly what is lacking in the male-dominated IT industry.
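To make this mechanism concrete, here is a deliberately oversimplified sketch in Python. Everything in it is hypothetical: the tiny "historical hiring" dataset is invented to contain a past bias, and the functions `learn_hire_rates` and `shortlist` are illustrative names, not how any real recruiting product works. The "screener" simply learns how often each group was hired in the past and then invites only candidates from groups that cleared a threshold.

```python
from collections import defaultdict

# Hypothetical, synthetic historical records: (gender, years_experience, was_hired).
# The data deliberately encodes a past bias: equally experienced women were hired less often.
history = [
    ("m", 10, True), ("m", 8, True), ("m", 6, True), ("m", 4, False),
    ("f", 10, False), ("f", 8, True), ("f", 6, False), ("f", 4, False),
]

def learn_hire_rates(records):
    """'Train' a naive screener: compute the historical hire rate per gender.

    Experience is ignored on purpose; the model only learns who was hired before.
    """
    counts = defaultdict(lambda: [0, 0])  # gender -> [hired, total]
    for gender, _years, hired in records:
        counts[gender][0] += int(hired)
        counts[gender][1] += 1
    return {g: hired / total for g, (hired, total) in counts.items()}

def shortlist(candidates, rates, threshold=0.5):
    """Invite only candidates whose group historically cleared the threshold."""
    return [name for name, gender in candidates if rates[gender] >= threshold]

rates = learn_hire_rates(history)
applicants = [("Anna", "f"), ("Ben", "m"), ("Clara", "f"), ("David", "m")]
print(rates)                         # {'m': 0.75, 'f': 0.25}
print(shortlist(applicants, rates))  # ['Ben', 'David']
```

Nobody wrote "reject women" into this code; the exclusion comes entirely from the historical data it learned from. Real systems are far more complex and usually do not see gender directly, but other features in the data can act as proxies for it and reproduce the same pattern.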

It’s not the algorithm’s fault

AI bias is generally unintentional. Artificial intelligence is not evil. But the consequences of its decisions can be significant: poor customer service, lower sales and revenue, and unfair or even illegal actions. They can even create dangerous situations.

YouTube Tip: Lo and Behold Clip – The Evolution of AI

https://www.youtube.com/watch?v=_ChGhnbCy6g

In a conversation with director Werner Herzog about the dangers of AI, Tesla founder and AI skeptic Elon Musk gave a compelling example: suppose an artificial intelligence were tasked with maximizing the value of an investment portfolio, and suppose its creators did not clearly specify how that goal may be achieved. In theory, the machine could then invest heavily in defense stocks and, in the worst case, start a war to drive up their value.

Watch Out! The Black Box Problem

The greatest danger of AI systems is the "black box problem": we are currently unable to fully understand why the algorithms behind AI work the way they do.

Our brain is our most vital organ, but also our most complex and enigmatic one. Artificial intelligence, the pinnacle of human technological development, is, even though we created it, in many ways a mystery. We do know a great deal about our brains, and we can predict with some certainty how they will respond to various stimuli. Likewise, we can predict with some certainty what results an AI algorithm will produce for given inputs. The mystery facing scientists and researchers is not the output but how it is generated. This lack of knowledge about the inner workings of the "black box" of artificial intelligence is the biggest hurdle in AI development and will be with us for a long time, if not forever.

The widespread use of artificial intelligence will be a particular challenge for humanity. Organizations that use AI will need to disclose the human decisions behind the design of their AI systems, as well as what ethical and social concerns they have considered, and how well they have monitored the results of those systems for traces of bias or discrimination. We need models we can trust. Achieving transparency in AI systems is critical. And we need one thing above all else: more diversity in computer science.

But what does that mean in concrete terms? What can I do?

- We must become digitally literate: engage intensively with the technologies we use and understand them as well as we can. We must not leave the field to the men.

- It is our mission to question AI models. We must insist that job advertisements are written in gender-neutral language, that colleagues review the candidates the AI selects, and that diverse data is used as the basis.

- A controversial issue for many, but an incredibly important one: AI learns from our language, which makes gender-inclusive language all the more important. Changing the way we use language is an important milestone in eliminating bias.

Film recommendation on the topic

In the documentary Coded Bias, filmmaker Shalini Kantayya follows computer scientist Joy Buolamwini of the MIT Media Lab, along with data scientists, mathematicians, and watchdog groups around the world, as they expose the discrimination caused by facial recognition algorithms that are now prevalent in all aspects of daily life. The documentary is available on Netflix.

“When you think of A.I., it’s forward-looking, but A.I. is based on data, and data is a reflection of our history.”

Joy Buolamwini